Unlike conventional video played on a flat screen, panoramic video does not need to follow such a prescriptive formula. There is a massive opportunity for creative expression and experimentation at this stage in the process, all the more so because the medium has no settled standards yet.

Curved screens, rooms covered with monitors and “immersive” screens harking back to the days of QuickTime VR are all still available and open for experimentation, but today we have more personal ways of sharing, in the form of head-mounted displays and mobile phones.

This chapter presents some current solutions for getting your film out into the world, starting with the anatomy of a VR video player and followed by the two main display methods: head-mounted displays and traditional monitors.

Today, a film can be distributed quite easily on a number of readily available platforms, including Facebook, YouTube and Samsung’s Milk VR, to name but a few. For more bespoke solutions, with room to experiment and add unique interactions, Unity can be used to create stand-alone video experiences with remarkably simple integration for the Oculus and Google Cardboard viewers.

The merit of each method depends largely on the needs of the filmmaker, the story and the resolution available. The size of your audience also shrinks as you travel up this totem pole of technology, with web-streaming services like YouTube forming the foundation and dedicated head-mounted displays (Oculus and Vive) at the top. It is still possible to release a video across all of them, since the largest differentiating factor between them is the resolution each can deliver to the viewer.

As with the previous chapters, the basic principles of the player are covered first, with links to some ready-to-use software. For those interested in creating their own video players, two possible approaches are shown at the end, one using Unity and the other using the open-source creative coding toolkit openFrameworks.

Quick Navigation

The Anatomy of a Panoramic Video Player

Head Mounted Displays (HMDs)

VR on the Web

Hitting the limits of H264

Roll your own Pano-player

Downloads

Further Reading

The Anatomy of a Panoramic Video Player

After all the effort put into transforming the world from a spherical capture to a flat image, presenting your film requires that process to be reversed. The simplest VR video player is just a way to convert a latlong-encoded picture into a limited, normal (rectilinear) projection. Limited, because only a portion of the image is seen at any one time; which portion is viewed is controlled by the audience, either through traditional input like a mouse or touchscreen, or through an integrated sensor like the gyroscope in a mobile phone or head-mounted display. The process is essentially identical to the one used in post-production, except that it now needs to run reliably in real time.
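The per-pixel version of this reversal can be sketched in a few functions: build a ray for each pixel of the flat view, turn it by the viewer's yaw and pitch, and look up where that direction lands in the latlong frame. This is a minimal Python sketch under stated assumptions, not any particular player's code: it assumes y is up, +z points at the centre of the latlong frame, angles are in degrees, and sampling is nearest-neighbour. The function names are hypothetical, and a real player would do this on the GPU.

```python
import math

def direction_to_latlong(x, y, z, width, height):
    """Map a 3D view direction to (u, v) pixel coordinates in a latlong frame.

    Assumed convention: y is up, +z looks at the centre of the frame, +x looks right.
    """
    lon = math.atan2(x, z)                                 # -pi..pi, 0 at frame centre
    lat = math.asin(y / math.sqrt(x * x + y * y + z * z))  # -pi/2..pi/2, positive is up
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v

def view_ray(i, j, view_w, view_h, hfov_deg, yaw_deg, pitch_deg):
    """Direction through pixel (i, j) of a flat view, turned by the viewer's yaw and pitch."""
    half = math.tan(math.radians(hfov_deg) / 2)
    x = (2 * (i + 0.5) / view_w - 1) * half
    y = (1 - 2 * (j + 0.5) / view_h) * half * view_h / view_w
    z = 1.0
    p = math.radians(pitch_deg)   # tilt around the x axis, positive looks up
    y, z = y * math.cos(p) + z * math.sin(p), -y * math.sin(p) + z * math.cos(p)
    w = math.radians(yaw_deg)     # turn around the y axis, positive looks right
    x, z = x * math.cos(w) + z * math.sin(w), -x * math.sin(w) + z * math.cos(w)
    return x, y, z

def render_view(latlong, view_w, view_h, hfov_deg, yaw_deg, pitch_deg):
    """Nearest-neighbour resample of a latlong frame (a list of pixel rows) into a flat view."""
    src_h, src_w = len(latlong), len(latlong[0])
    view = []
    for j in range(view_h):
        row = []
        for i in range(view_w):
            ray = view_ray(i, j, view_w, view_h, hfov_deg, yaw_deg, pitch_deg)
            u, v = direction_to_latlong(*ray, src_w, src_h)
            # Longitude wraps around the seam; latitude is clamped at the bottom pole.
            row.append(latlong[min(int(v), src_h - 1)][int(u) % src_w])
        view.append(row)
    return view
```

Pitch is applied before yaw so that looking up and then turning behaves like a head on a neck, rather than tilting around a world axis.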

A straightforward method to display a panoramic video is to map it onto a sphere with a playback camera at its centre. The field of view of the camera becomes the view of your audience. Whether this mapping is literal, done with real geometry inside a 3D application like Unity, or computational, done through a mathematical formula, the results are visually identical.
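For the literal route, the player builds a sphere whose texture coordinates spread the latlong frame over its inside. The sketch below generates such a mesh. The row and column counts and the axis convention (y up, frame centre along +z, v = 0 at the top pole) are assumptions, and the function name is hypothetical. Depending on the engine, you may need to flip v, reverse the triangle winding or disable back-face culling so the inside of the sphere is drawn.

```python
import math

def uv_sphere(rows, cols, radius=1.0):
    """Vertices, (u, v) texture coordinates and triangles for a latitude/longitude sphere.

    The seam column is duplicated (cols + 1 vertices per row) so that u can run
    from 0 to 1 without wrapping back across the texture.
    """
    vertices, uvs = [], []
    for r in range(rows + 1):
        v = r / rows                        # 0 at the top pole, 1 at the bottom pole
        lat = math.pi * (0.5 - v)           # +pi/2 .. -pi/2
        for c in range(cols + 1):
            u = c / cols                    # 0..1 across the latlong frame
            lon = 2 * math.pi * (u - 0.5)   # -pi..pi, frame centre at lon = 0
            vertices.append((radius * math.cos(lat) * math.sin(lon),
                             radius * math.sin(lat),
                             radius * math.cos(lat) * math.cos(lon)))
            uvs.append((u, v))
    triangles = []
    for r in range(rows):
        for c in range(cols):
            a = r * (cols + 1) + c          # top-left corner of this quad
            b = a + cols + 1                # bottom-left corner
            triangles.append((a, b, a + 1))
            triangles.append((a + 1, b, b + 1))
    return vertices, uvs, triangles
```

More rows and columns give a smoother sphere; because each vertex carries its exact latlong coordinate, the texture itself is interpolated correctly even on a fairly coarse mesh, apart from slight bending of straight lines across large triangles.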

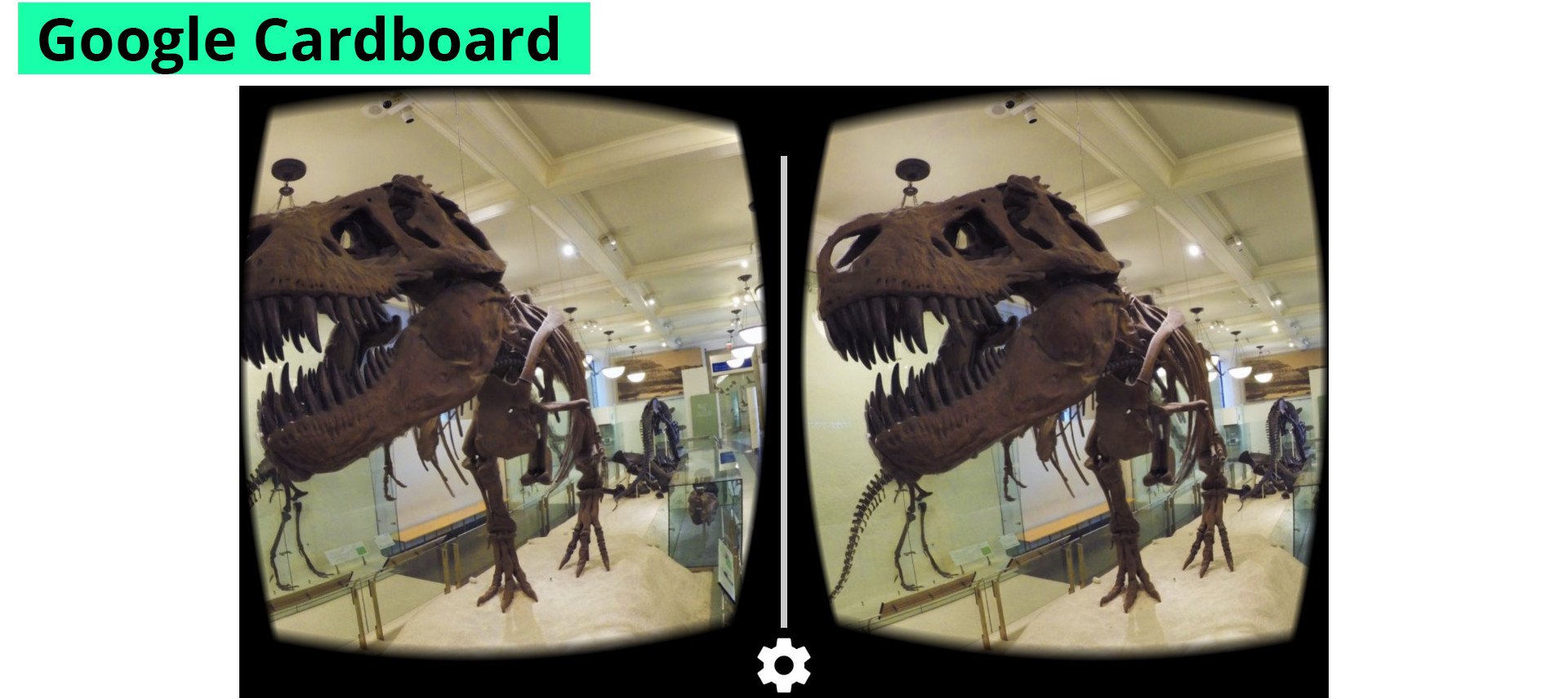

For players on a head-mounted display like the Oculus, or on mobile phone cases like the Samsung Gear VR and Google Cardboard, the view needs to be duplicated for each eye. Head-mounted displays also offer the ability to show content in stereo, much like 3D cinema. Stereo productions are many times more complicated and are discussed in the Advanced topics chapter.
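On a phone in a Cardboard-style case, duplicating the view usually means splitting the screen into two side-by-side viewports and drawing the same mono frame into each. A minimal sketch of that split, assuming a landscape screen and an (x, y, width, height) viewport tuple; the layout and function names are hypothetical, not any particular SDK's.

```python
def side_by_side_viewports(screen_w, screen_h):
    """Split a landscape screen into left-eye and right-eye viewports as (x, y, w, h).

    For mono video both eyes are rendered with the same camera and the same video
    frame; only the viewport changes. An odd leftover pixel goes to the right eye.
    """
    half = screen_w // 2
    left = (0, 0, half, screen_h)
    right = (half, 0, screen_w - half, screen_h)
    return left, right

def eye_aspect(viewport):
    """Aspect ratio (width / height) that each eye's camera should use."""
    _, _, w, h = viewport
    return w / h
```

On a 2560×1440 phone each eye gets a 1280×1440 viewport, so each eye's camera needs an aspect ratio below 1, taller than it is wide.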

Technically, the virtual cameras for head-mounted displays should be offset by a small amount, so that each captures a little more of the world on its own side, as your eyes do away from your nose. In practice, however, this is usually ignored in favour of simplicity. Many players designed for head-mounted displays do add some barrel distortion along with a heavy vignette around the view of each eye. The barrel distortion counteracts the pincushion distortion introduced by the headset’s lenses, while the vignette softens the edges so the picture fades gradually towards the periphery.
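The barrel distortion and vignette can be sketched as two per-pixel functions. The radial polynomial below is a common way to model lens distortion, but the coefficients k1 and k2 are illustrative placeholders: real values come from the headset maker's SDK or from calibration. The 0.8 to 1.0 vignette band is likewise an arbitrary choice, and both function names are hypothetical.

```python
import math

def barrel_sample_point(x, y, k1=0.22, k2=0.24):
    """Where to sample the undistorted eye image for an output pixel.

    (x, y) are normalised coordinates centred on the lens axis, with r = 1 roughly at
    the edge of the eye's view. Sampling further out as r grows pulls the outer parts
    of the picture inward (barrel distortion), which the lens's pincushion then undoes.
    Points that land outside the source image should be drawn black.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale

def vignette(x, y, inner=0.8, outer=1.0):
    """Brightness multiplier: 1 inside `inner`, easing smoothly to 0 at `outer`."""
    r = math.hypot(x, y)
    t = min(max((r - inner) / (outer - inner), 0.0), 1.0)
    return 1.0 - t * t * (3.0 - 2.0 * t)   # 1 - smoothstep(t)
```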

The audience needs some way of interacting with the player, and this may be as simple as clicking and dragging inside the video to change the view. On a mobile phone, the phone’s orientation can be linked to the video so that people can look around as though their phone were a window into another world.
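Click-and-drag control reduces to turning pixel movements into yaw and pitch. A minimal sketch, assuming screen y grows downward, yaw is in degrees with positive turning right, and the rotation rate is tied to the view's field of view so the picture roughly follows the cursor. The class and its parameters are hypothetical.

```python
class DragLook:
    """Hypothetical mouse/touch controller that turns drags into yaw and pitch (degrees)."""

    def __init__(self, hfov_deg, view_width_px):
        self.yaw = 0.0
        self.pitch = 0.0
        # Degrees per dragged pixel, so the picture moves roughly with the cursor.
        self.deg_per_px = hfov_deg / view_width_px

    def drag(self, dx_px, dy_px):
        # Dragging right pulls the picture right, so the view turns left.
        # Yaw is wrapped into the range [-180, 180).
        self.yaw = (self.yaw - dx_px * self.deg_per_px + 180.0) % 360.0 - 180.0
        # Dragging down pulls the picture down, so the view tilts up; stop at the poles.
        self.pitch = max(-90.0, min(90.0, self.pitch + dy_px * self.deg_per_px))
        return self.yaw, self.pitch
```

Clamping pitch at the poles avoids flipping the world upside down, while yaw is free to spin all the way round.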

A more sophisticated, memory-efficient way of distributing the video is to convert the latlong to a cube map, creating a more “computer-friendly” file. Most players, however, including YouTube and Facebook, still expect latlong files.
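A latlong-to-cube converter walks over every texel of every cube face, works out the direction through it, and samples the latlong along that direction. The sketch below shows the face lookup in both directions, modelled on the face table OpenGL uses for cube-map lookups, plus a rough pixel-count comparison. It assumes a face size of a quarter of the latlong width, which keeps resolution at the horizon about the same. Other tools lay out and orient faces differently, and the function names are hypothetical.

```python
def cube_face_uv(x, y, z):
    """Cube face and (s, t) in 0..1 for a non-zero direction (OpenGL-style face table)."""
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:                         # x is the major axis
        face, ma, sc, tc = ("+x", ax, -z, -y) if x > 0 else ("-x", ax, z, -y)
    elif ay >= az:                                    # y is the major axis
        face, ma, sc, tc = ("+y", ay, x, z) if y > 0 else ("-y", ay, x, -z)
    else:                                             # z is the major axis
        face, ma, sc, tc = ("+z", az, x, -y) if z > 0 else ("-z", az, -x, -y)
    return face, (sc / ma + 1) / 2, (tc / ma + 1) / 2

def cube_texel_direction(face, s, t):
    """Inverse of cube_face_uv: the (unnormalised) direction through (s, t) on a face.
    A latlong-to-cube converter samples the latlong frame along this direction."""
    sc, tc = 2 * s - 1, 2 * t - 1
    return {
        "+x": (1.0, -tc, -sc), "-x": (-1.0, -tc, sc),
        "+y": (sc, 1.0, tc),   "-y": (sc, -1.0, -tc),
        "+z": (sc, -tc, 1.0),  "-z": (-sc, -tc, -1.0),
    }[face]

def pixel_counts(latlong_width):
    """Pixels in a 2:1 latlong frame versus six cube faces a quarter of that width."""
    face = latlong_width // 4
    return latlong_width * (latlong_width // 2), 6 * face * face
```

For a 4096-pixel-wide latlong, the cube map holds about 6.3 million pixels against 8.4 million, a quarter fewer, largely because the latlong oversamples the poles.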

FRAMERATES – MORE IS MORE

There are two separate frame rates to consider when talking about virtual reality: the frame rate of the video being played, and the rate at which the player updates its view of that video. It is important to treat them separately; a good player reflects this by decoupling them, whereas a bad one locks the two together.

While 24 frames per second may be sufficient for persistence of vision, if the player does not update its view at least twice as often, the video may appear to stutter, undermining the immersive nature of the content. Moreover, a stuttering player on a head-mounted display can cause motion sickness. The jury is still out on which magic number the industry will settle on, but suffice it to say that it will be a rather large one. The Sony Morpheus, for example, is slated to refresh at least 90 times per second.
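A player that keeps the two rates separate redraws the view on every display refresh, using the latest head orientation, but only swaps in a new video frame when the video clock says so. A minimal sketch of that bookkeeping, assuming whole-number frame and refresh rates (23.976 fps would need a rational or timestamp-based clock); the function names are hypothetical.

```python
def video_frame_at_refresh(refresh_index, display_hz, video_fps):
    """Index of the video frame that should be visible on a given display refresh.
    Integer arithmetic avoids rounding errors right at frame boundaries."""
    return refresh_index * video_fps // display_hz

def simulate(seconds, display_hz, video_fps):
    """Count video-texture updates versus view redraws over a stretch of playback."""
    uploads, redraws, current = 0, 0, None
    for n in range(seconds * display_hz):
        frame = video_frame_at_refresh(n, display_hz, video_fps)
        if frame != current:
            uploads += 1      # decode the next frame and upload it as the sphere's texture
            current = frame
        redraws += 1          # re-project with the newest head orientation every refresh
    return uploads, redraws
```

At 60 Hz with 24 fps content, each video frame stays up for three and then two refreshes in turn, but the view itself still moves on all 60.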

At the moment, the limiting factor for the player’s frame rate is the display itself. Most modern displays have a maximum refresh rate (the number of times per second the picture can be redrawn) of between 60 and 75 Hz. Mobile phones have the same kind of limit, generally 60 Hz, so even the best player cannot draw the scene more than 60 times per second on a phone. Fortunately, for all but the most extreme head movements, this is adequate.
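The refresh rate translates directly into a time budget per frame, and into how far the view jumps between frames during a head turn. A small sketch of that arithmetic; the function names are hypothetical, and the 120 degrees per second head speed is an illustrative figure, not a measured one.

```python
def frame_budget_ms(refresh_hz):
    """Milliseconds available to draw each frame at a given refresh rate."""
    return 1000.0 / refresh_hz

def turn_per_refresh_deg(head_speed_deg_s, refresh_hz):
    """Degrees the view rotates between two consecutive refreshes during a head turn."""
    return head_speed_deg_s / refresh_hz

for hz in (60, 75, 90):
    print(f"{hz} Hz: {frame_budget_ms(hz):.1f} ms per frame, "
          f"{turn_per_refresh_deg(120, hz):.2f} deg per refresh at 120 deg/s")
```

At 60 Hz that is about 16.7 ms per frame and a 2-degree step per refresh; at 90 Hz, about 11.1 ms and 1.33 degrees.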

The frame rate of the content is up to the creator of the experience, and I encourage experimentation in this regard. Fast-moving action may not appear as smooth in 360 as in normal video, but this may very well create an interesting effect or be exploited to set a mood for the piece. Very high frame rate video may alleviate the problem, but in many circumstances it only adds processing overhead while doing little to improve the experience.

Determining the View of your Audience

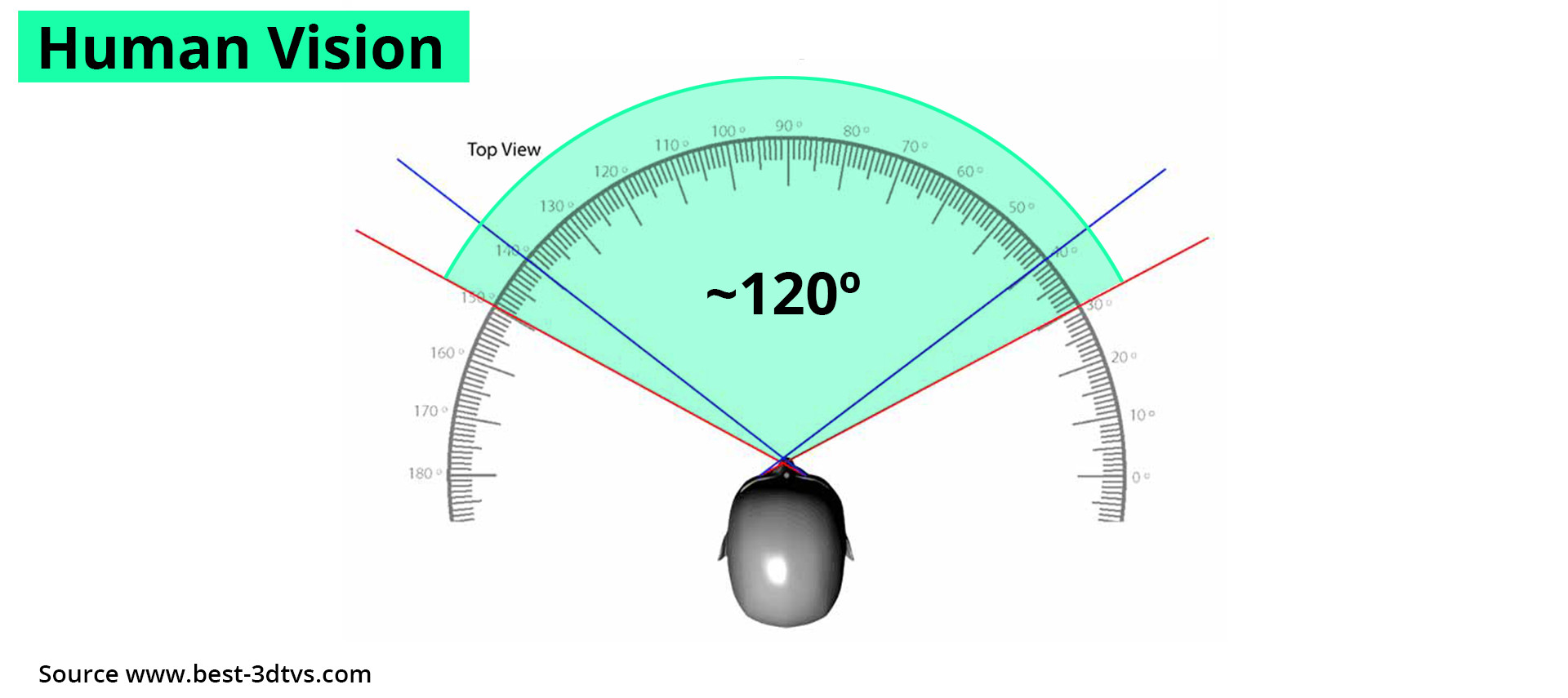

The focal length (field of view) of the camera becomes the view the audience can move around. For films that aim to recreate a “normal” view of the world, like a documentary, the camera’s lens should be set close to the natural field of view of human vision, somewhere between 120 and 180 degrees. A full 180-degree view, however, is unattainable in a flat projection, and fields of view approaching it look wildly distorted.
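Why a flat 180-degree view is impossible falls straight out of the pinhole model: a point at angle θ from the view axis lands at f·tan θ from the centre, so the picture widens by 1/cos²θ towards the left and right edges, and tan θ diverges at 90 degrees. A short sketch with hypothetical helper names:

```python
import math

def focal_length_px(view_width_px, hfov_deg):
    """Pinhole focal length, in pixels, giving a flat view this horizontal field of view."""
    return (view_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)

def edge_stretch(hfov_deg):
    """How much wider a small feature appears at the left/right edge of a flat view
    than at its centre.

    A point theta away from the axis lands at f * tan(theta), whose rate of change is
    f / cos(theta)**2, so the ratio to the centre is 1 / cos(theta)**2.
    """
    half = math.radians(hfov_deg) / 2
    return 1.0 / math.cos(half) ** 2

for fov in (90, 120, 150, 170):
    print(f"{fov} deg view: edges stretched {edge_stretch(fov):.1f}x")
```

At 120 degrees the edges are already stretched four times; at 170 degrees, more than a hundred times.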

The field of view of the human eye also stretches into the periphery, an area that a screen mounted flat in front of your eyes does not cover. For most viewers, the result is similar to viewing the world through a rectangular hole cut in a piece of paper.
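Because that hole is rectangular, the horizontal and vertical fields of view differ, and a player usually derives one from the other and the screen's aspect ratio. A sketch, assuming square pixels; the function name is hypothetical.

```python
import math

def vertical_fov_deg(hfov_deg, aspect):
    """Vertical field of view of a flat view, given its horizontal FOV and width/height ratio."""
    half_h = math.radians(hfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_h) / aspect))
```

A 90-degree horizontal view on a 16:9 screen is only about 59 degrees tall. This conversion matters in Unity, whose camera field of view is specified vertically.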

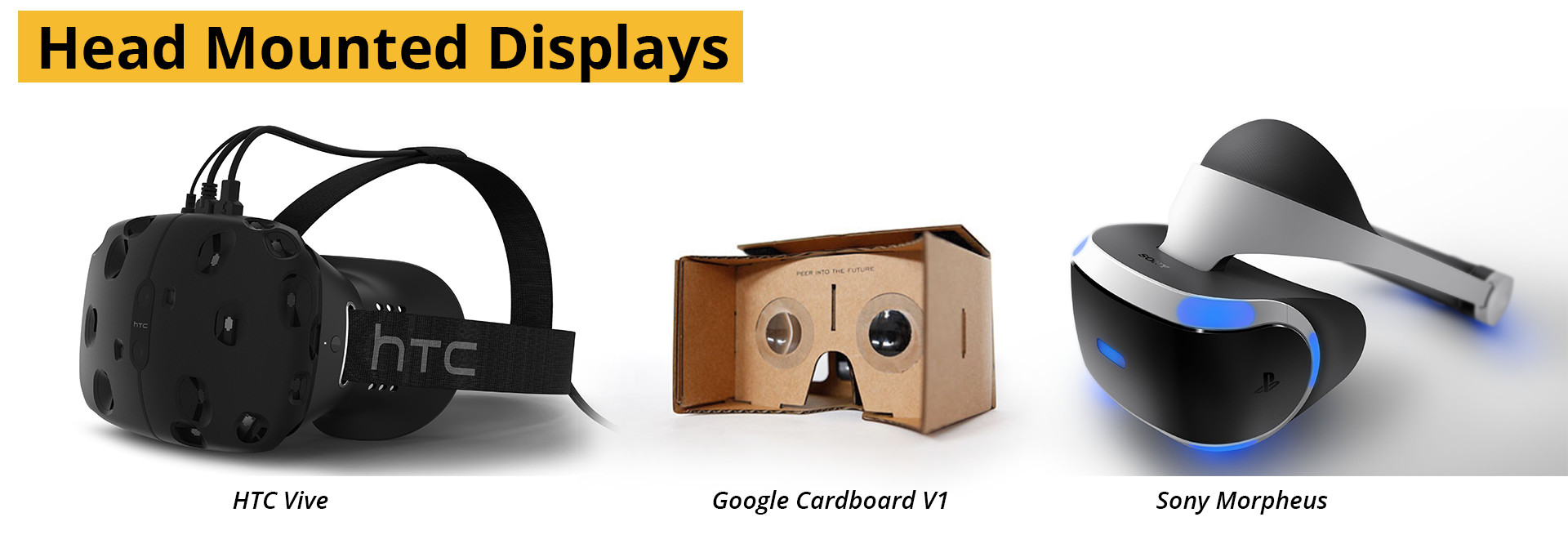

Head Mounted Displays (HMDs)

A head-mounted display is, as the name implies, a monitor (or monitors) worn on a person’s head. A set of glass or plastic lenses brings the screens into sharp focus, aiming to cover as much of your field of view as possible. Some need to be attached to a computer, like the Oculus Rift, HTC Vive and Sony Morpheus. Others are simply cases with lenses that hold a mobile phone, which processes the content and displays it on its own screen. Samsung’s Gear VR, the Zeiss VR One and Google Cardboard are examples of mobile head-mounted displays.

All of the dedicated HMDs can track position as well as rotation, but this should be disabled during video playback, as the video contains no positional information. Under some circumstances, moving the camera away from the centre can even make the sphere itself visible through the change in perspective, undermining the experience.
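In a custom player, ignoring position usually means building the camera from the tracked orientation alone and discarding the translation. A minimal sketch, assuming the pose arrives as a 4×4 row-major matrix with its translation in the last column. That layout and the function name are hypothetical; every SDK exposes poses differently.

```python
def rotation_only(pose):
    """Copy of a 4x4 head pose with its translation zeroed, so the virtual camera
    turns with the head but always stays at the centre of the video sphere."""
    view = [list(row) for row in pose]
    for r in range(3):
        view[r][3] = 0.0   # drop x, y, z translation; keep the 3x3 rotation
    return view
```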

A number of video players are available for the Oculus Rift and similar headsets, many of them free and offering a variety of projection options.

Cineveo

Infinity VR Drive-In

Kolor Eyes Desktop

Maximum VR Movies (MaxVR)

Oculus Video Player (Total cinema360)

RiftMax Theater

VLC VR

VR Player

VR Video Bubbles

Whirligig

For mobile, the list is more limited once you move past YouTube. Players also depend on the phone, and few cross-platform players exist: for the Samsung Gear VR there is Oculus Cinema, and for Google Cardboard on Android, VR Player, though many more may be on their way.

Apple is yet to stake a claim in VR, and the App Store has a very stringent policy on apps that allow unmanaged content to be added after the fact, such as third-party video players. Some, however, like Kolor Eyes, allow limited video downloads from the web. Most VR films for iPhone are created as standalone apps using Unity or native code; the process for creating such an app is shown at the end of the chapter.

VR on the Web

By far the easiest way to distribute panoramic video is the internet, with its massive potential audience. Any modern WebGL-capable browser can play 360 video content right inside the page, and many players extend this to mobile phones. On phones with a gyroscope, the video can be navigated by rotating the phone in space.

Both YouTube and Facebook now accept 360 video uploads directly, which can then be viewed in any browser or in their apps. For YouTube, the file first needs to go through a special metadata injector, which tells the YouTube uploader that the video should be played back as 360.

For self-hosted sites, several three.js-based players are freely available, some of which also support HMDs straight from the browser. eleVR, an excellent research group, has open-sourced its player code and offers downloadable content as well.

Hitting the limits of H264

H.264 did for video what MP3 did for audio and JPEG did for photography: it is the standard for video compression on the web, on mobile phones and on desktops, and it far surpasses any other format in reach, ease of use, compression and quality. Almost all video content on the internet is currently H.264, and consequently so is most VR content. Some filmmakers, though, have already discovered the codec’s hidden limits.

H.264 was never intended for the very high resolutions that VR easily reaches and often requires. At its highest widely supported levels (5.1 and 5.2), the specification caps a frame at 36,864 macroblocks of 16×16 pixels, about 9.4 megapixels: enough for 3840×2160, or for other arrangements of roughly 9 megapixels within a per-side limit. Given that the optimal resolution for a panoramic video can be many times this, especially on a particularly high-resolution HMD, the codec is showing its age. The next generation of video encoding is slowly being adopted and will offer some relief.
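The limit is easiest to reason about in macroblocks, the 16×16 pixel units H.264 codes in. The sketch below checks a frame size against the Level 5.1/5.2 limits: 36,864 macroblocks per frame, with each side at most √(8 × 36,864) ≈ 543 macroblocks, following the level limits in the specification's Annex A. The function name is hypothetical, and real encoders and decoders may impose further limits of their own.

```python
import math

MAX_FS_LEVEL_5_1 = 36864  # max frame size in 16x16 macroblocks for H.264 Levels 5.1 and 5.2

def fits_h264_level_5_1(width, height):
    """True if a frame of this size is within H.264 Level 5.1/5.2 frame-size limits."""
    mbs_w = math.ceil(width / 16)    # partial macroblocks still count in full
    mbs_h = math.ceil(height / 16)
    side_limit = math.isqrt(8 * MAX_FS_LEVEL_5_1)   # about 543 macroblocks per side
    return mbs_w * mbs_h <= MAX_FS_LEVEL_5_1 and mbs_w <= side_limit and mbs_h <= side_limit

for w, h in ((3840, 2160), (4096, 2048), (5760, 2880), (7680, 3840)):
    print(w, h, fits_h264_level_5_1(w, h))
```

A 4096×2048 latlong fits; a 5760×2880 one needs 64,800 macroblocks and does not.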

High Efficiency Video Coding (HEVC), or H.265, is slated to replace it, supporting pictures roughly four times the size H.264 commonly allows and aiming for similar quality at around half the bitrate. Streaming services have begun moving to next-generation codecs; YouTube, for instance, streams its highest resolutions with Google’s own VP9 codec. Even though there are no commercial monitors capable of displaying these resolutions yet, VR video stands to benefit most directly from the extra pixels. Although industry-wide roll-out has been slow, those using standalone applications, or who need very high-resolution video playback, can take advantage of it already.
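HEVC states its level limits in luma samples per picture rather than macroblocks. The sketch below compares Levels 5.x and 6.x (8,912,896 and 35,651,584 luma samples, with each side at most √(8 × MaxLumaPs)). The values follow the level table in the HEVC specification; the helper itself is hypothetical, and actual hardware decoders may support less.

```python
import math

MAX_LUMA_PS = {"5.x": 8_912_896, "6.x": 35_651_584}  # max luma samples per picture

def fits_hevc(width, height, level):
    """True if a frame of this size is within the HEVC picture-size limit for `level`."""
    max_ps = MAX_LUMA_PS[level]
    side_limit = math.isqrt(8 * max_ps)   # 8444 samples for 5.x, 16888 for 6.x
    return width * height <= max_ps and width <= side_limit and height <= side_limit

for w, h in ((4096, 2048), (5760, 2880), (8192, 4096)):
    print(w, h, fits_hevc(w, h, "5.x"), fits_hevc(w, h, "6.x"))
```

Level 6.x allows exactly four times the picture size of Level 5.x, enough for an 8192×4096 latlong.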

Roll your own Pano-player

Although there are a number of ways to play a video, you may still choose to build your own player for a presentation, whether because a project needs a special camera, extra post-effects or unique sound, or simply to understand the process more thoroughly. Below are two ways of creating a 360-degree video player: a full-screen desktop player controlled by the mouse, built in Unity, and a 360 video player for Google Cardboard, built in openFrameworks.

Using Unity

Unity is a game engine and was not designed with video playback in mind, but there are ways around that. RenderHeads’ QuickTime and Windows Media plugins offer excellent video playback integrated directly into Unity, should the built-in abilities fall short. To adapt the project for Google Cardboard, you’ll need to download the Cardboard Unity package here.

Furthermore, neither iOS nor Android directly supports video as a texture in Unity; this is a long-standing problem that will hopefully be addressed one day. For Android, the Mobile Movie Texture plugin is available at a reasonable price, but no such plugin exists for iPhone. For more fluid playback and control, I recommend having a look at openFrameworks, a cross-platform creative coding toolkit.

Using openFrameworks

openFrameworks is a community-driven open-source project aimed at making creative coding accessible to artists and creators. It has a fantastic community, and the toolkit is built from the ground up to be as cross-platform as possible, often letting you compile for multiple platforms with no changes to the code at all.

The code is very simple; rather than duplicate it here, you can find it on my GitHub, linked in the Downloads section below.

Downloads

Simple 360 / Cardboard App on GitHub

Further Reading

Why Pretty Much All 360 VR Videos Get It Wrong

How fast does Virtual Reality need to be.

Fighting Motion Sickness due to Explicit Viewpoint Rotation
