There are so many more fascinating technologies related to virtual reality that talking about all of them would take forever. Over the course of the previous chapters a number of questions surely sprang to mind, and I hope to address at least some of them here. Maybe one day all of the previous chapters could be condensed into a single introductory paragraph, and the topics here could be discussed as a new level of virtual filmmaking.

Stereoscopic Panoramas

Stereoscopic vision comes down to two: two eyes, each seeing its own picture. We see the world twice over, and because of this we understand things like distance, perspective, speed, acceleration and a whole slew of other things without ever thinking about them. Cinema has been trying (and is still trying, because it's still not perfect) to make movies that give us that same experience. In fact, we've been at it almost since the camera was invented.

For VR, this idea gets especially tricky. Capturing a scene so that it produces not one but two panoramic images, which, shown one to each eye, create a consistent and believable sense of depth, is hard. But maybe not for the reasons you'd imagine.

What makes stereo so hard?

The problem with stereo is twofold. On the one hand, we have trouble, immense trouble in fact, creating a perfectly parallax-free image of the world for just one eye. If that process is doubled and things appear in inconsistent places for each eye, not only is the illusion lost, but you've also created a sure-fire headache for your audience as their brains work overtime to reconcile the differences.

On the other hand, we have a problem of viewpoints. Ideally, wherever our viewer looks and whatever orientation they take, we need to give them two pictures. Those pictures must always have been captured from positions offset by the same distance (our eyes cannot move around in our heads). When making a conventional movie, that's rather simple in principle: just put two cameras side by side and start filming. If we did that in VR, things would go pear-shaped the moment someone started to turn their head.

As we turn our heads the effect fades: looking 90 degrees to the side, one camera sits almost directly behind the other, so both see nearly the same picture. Worse, when we turn all the way around, the left and right views become swapped!
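
To put rough numbers on this, the sketch below assumes a fixed pair of forward-facing cameras 64 mm apart (an assumed value, roughly a typical human eye separation) and asks how much of that separation survives, from the viewer's new point of view, after they turn their head by a given yaw angle. The usable stereo baseline is the component of the camera offset perpendicular to the new line of sight, which shrinks with the cosine of the turn.

```python
import math

# Assumed camera separation, roughly a typical human interpupillary distance.
BASELINE_M = 0.064


def effective_baseline(baseline_m: float, yaw_deg: float) -> float:
    """Sideways separation of a fixed, forward-facing camera pair as seen
    by a viewer who has turned yaw_deg away from the cameras' direction.

    Positive: normal stereo. Near zero: no depth cue. Negative: eyes swapped.
    """
    return baseline_m * math.cos(math.radians(yaw_deg))


for yaw in (0, 45, 90, 135, 180):
    b = effective_baseline(BASELINE_M, yaw)
    print(f"turned {yaw:3d} deg -> effective baseline {b:+.4f} m")
```

At 90 degrees the value collapses to zero and the depth cue vanishes; past that the sign flips, which is the left/right swap.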

To solve this, many filmmakers try to emulate cinema's way of capturing stereo by placing pairs of cameras side by side all the way around a sphere. The idea is this: if we capture enough pairs, then stitch all the left-eye images into one view and all the right-eye images into the other, we'll get stereo. And you do... to an extent.

You can only fit so many cameras into a rig, no matter how small they are. Human eyes can take up a practically continuous range of positions, even if all we do is slowly rotate our heads. A rig, however, can only represent a handful of those, so what happens to all the small increments in between? Well, nothing: they don't exist.
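
The size of those gaps can be made concrete. Suppose, hypothetically, a ring of `num_pairs` camera pairs spread evenly around the horizon, and a player that simply shows whichever pair faces closest to where the viewer is looking (real stitchers blend between neighbouring pairs, so this is a simplification). The angle between the viewer's gaze and the pair actually used can reach half the spacing between pairs, that is 180/N degrees.

```python
def nearest_pair(yaw_deg: float, num_pairs: int) -> tuple[int, float]:
    """Return (index of the closest camera pair, angular error in degrees)
    for a viewer looking at yaw_deg around a ring of evenly spaced pairs."""
    spacing = 360.0 / num_pairs
    yaw = yaw_deg % 360.0
    index = round(yaw / spacing) % num_pairs
    # Smallest angle between the gaze and the chosen pair, folded into [0, 180].
    error = abs((yaw - index * spacing + 180.0) % 360.0 - 180.0)
    return index, error


# Sample gaze directions every 0.1 degree and report the worst mismatch.
for n in (4, 8, 16):
    worst = max(nearest_pair(y / 10, n)[1] for y in range(3600))
    print(f"{n:2d} pairs -> worst-case gaze error {worst:.1f} deg")
```

Doubling the number of pairs only halves the worst-case error; it never reaches zero.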

We would need many more cameras, pointing in many more directions and stitched in a very complicated way, to even approach true stereo filmmaking. When we consider that NO physical camera system can capture a perfectly parallax-free image in 360 degrees, the problem becomes a little easier to comprehend. If we can't adequately ensure that even one view is correct, how do we do it for all possible views?
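
Why no physical rig can be parallax-free comes down to geometry: two lenses cannot occupy the same point in space, so neighbouring cameras see a nearby object from slightly different directions, and a stitch can only line up perfectly at one distance. As a simplified sketch, assume an object straight ahead of one lens at distance D and a second lens offset sideways by s; the directions to the object then differ by atan(s/D). The 3 cm lens offset below is an illustrative assumption, not the specification of any real rig.

```python
import math


def parallax_deg(lens_offset_m: float, distance_m: float) -> float:
    """Angle (degrees) between the directions to one object as seen from
    two lenses offset sideways by lens_offset_m, with the object straight
    ahead of the first lens at distance_m."""
    return math.degrees(math.atan2(lens_offset_m, distance_m))


LENS_OFFSET_M = 0.03  # hypothetical spacing between neighbouring lenses

for d in (0.5, 1.0, 2.0, 5.0, 20.0, 100.0):
    print(f"object at {d:5.1f} m -> parallax {parallax_deg(LENS_OFFSET_M, d):.3f} deg")
```

The mismatch only becomes small for distant objects; it never disappears, which is why nearby subjects tear at the stitch lines.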

Even if we could build a camera that captured hundreds of views, more complicated than the most ambitious Jaunt, Google or hobbyist rig, there would be fundamental problems in using that many viewpoints. How do we know which view should be stitched? Eyes are not always perfectly level, nor are they always looking straight ahead. We tilt our heads as second nature, but that ruins everything from a stereo playback perspective: the images were stitched assuming a certain posture on your behalf, matched as though your eyes were level, and now they aren't.

This problem and its related challenges are neatly described by the hairy ball theorem. The idea is roughly this: you have a ball (picture a long-haired tennis ball, for example, though any ball will do), but it has to be completely covered in hair, everywhere. And you have to comb all the hair so that it lies flat.

No matter how well you comb, or which way you go about it, there will be at least one spot where the hair stands up, away from the ball. It is such a simple but fundamentally frustrating idea.

The problem of the hairy ball is the same as that of stereo 360 capture if you imagine each strand of hair as the line joining the two eyes for one possible view into the world, the direction along which that view has to be paired up to create stereo. It doesn't matter how, or in how many ways, you go about pairing the cameras up to create the panoramas: at some point you're going to end up with views that can't be paired anymore. Vi from EleVR did an exceptionally easy-to-follow explanation of the theory, and I recommend reading it here.
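
The connection can be checked numerically for one common way of pairing eyes in omnidirectional stereo, assumed here purely for illustration: for every viewing direction, place the two eyes along the horizontal axis given by the cross product of the view direction with the world up vector. That axis is one "hair" on the ball. Its length equals the cosine of the pitch, so it shrinks to nothing looking straight up or straight down, where it has no defined direction at all. This is not a proof of the theorem; the theorem says every continuous choice must fail somewhere, and this particular choice fails at the poles.

```python
import math

Vec = tuple[float, float, float]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def eye_axis(yaw_deg: float, pitch_deg: float) -> Vec:
    """Unnormalised left-to-right eye axis for a view direction, taken as
    view x up in a right-handed frame with z up. Its length is cos(pitch)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    view = (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))
    return cross(view, (0.0, 0.0, 1.0))


def length(v: Vec) -> float:
    return math.sqrt(sum(c * c for c in v))


for pitch in (0, 45, 80, 89, 90):
    print(f"pitch {pitch:2d} deg -> eye-axis length {length(eye_axis(30, pitch)):.4f}")
```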

There are more than a few stereo VR videos being made and, to be honest, a lot of them are breathtaking. But all of them suffer from this problem. Fundamentally, it is not possible to represent all the possible view combinations our eyes can create using only two flat images. If the viewer, the person in the headset, plays along and doesn't go too wild with their head movements, all is well. But lean in, tilt your head or lean all the way back (flipping the world), as some more adventurous viewers immediately do, and the illusion is broken.

Using a 3D renderer helps. The ability to render parallax-free with an unlimited number of cameras lets you do things that are literally impossible in the real world. The stereo panoramic render mode for Arnold, for example, has incredible fidelity and can even handle mild head tilting because of the complexity of the combined "views". But even these idealized virtual cameras run into the hairy ball problem at the poles, and it is recommended that the "stereo-ness", the amount of disparity between the two eyes, be reduced near the top and bottom of the picture. The problem, fundamentally, lies in the storage and playback of stereoscopic information more than in our ability to create it.
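
One simple way to follow that recommendation is sketched below: keep the full eye separation up to some pitch, then ramp it smoothly down to zero at the poles, so the undefined eye axis no longer matters there. The 60 degree starting point and the raised-cosine ramp are assumptions chosen for illustration; they are not Arnold's actual parameters, and each renderer exposes its own controls for this.

```python
import math


def pole_scaled_ipd(ipd_m: float, pitch_deg: float,
                    fade_start_deg: float = 60.0) -> float:
    """Eye separation to use for a view at pitch_deg (hypothetical helper).

    Full separation up to fade_start_deg, then a raised-cosine ramp that
    reaches exactly zero straight up or straight down (|pitch| = 90)."""
    p = min(abs(pitch_deg), 90.0)
    if p <= fade_start_deg:
        return ipd_m
    t = (p - fade_start_deg) / (90.0 - fade_start_deg)  # 0..1 across the fade band
    return ipd_m * 0.5 * (1.0 + math.cos(math.pi * t))


for pitch in (0, 60, 70, 80, 90):
    print(f"pitch {pitch:2d} deg -> eye separation {pole_scaled_ipd(0.064, pitch) * 1000:.1f} mm")
```

A smooth ramp rather than a hard cut-off avoids a visible ring where the depth suddenly switches off.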

So is truly stereo panoramic video impossible? Yes.

But only if you think video has to stay fundamentally a flat medium.

Video as a 3 Dimensional Medium

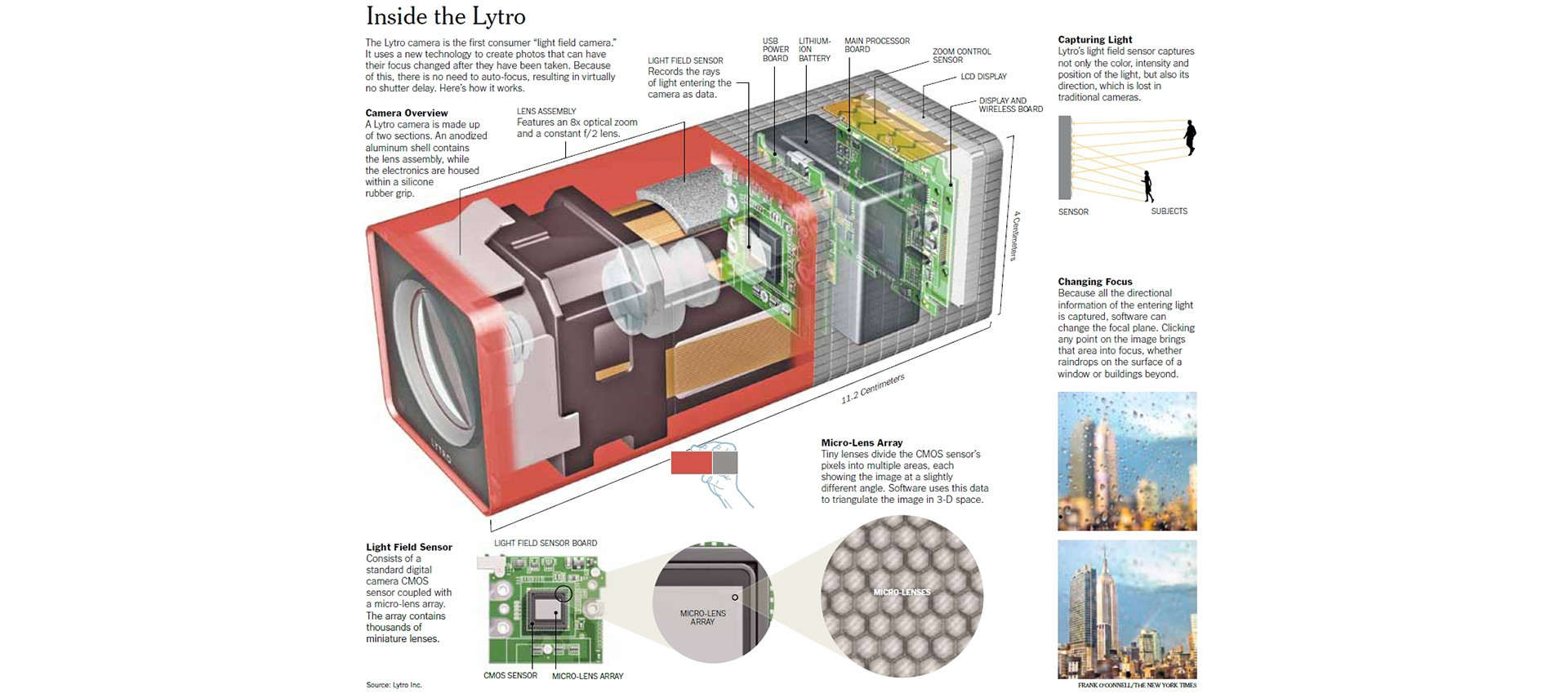

For a number of years a new field has been going from strength to strength, developing little technological oddities, curiosities and widgets: areas where a little computer attached to a little camera could do things that a big camera couldn't. One such widget was the lightfield camera, and the Lytro was its first consumer model.

The Lytro had a grid of tiny lenses in front of its sensor and a little computer to make sense of them all. When you took a picture, you weren't taking it from just one “eye” but from many, each slightly offset from its neighbour. By combining these images using some inspired mathematics and more than a little help from the hardware, you were left with a flat image… But this was no ordinary picture. Along with the regular stuff, the reds, greens and blues that make white white, there were layers of extra information: information about the distance to your subjects and what lay behind them. The makers of the first Lytro camera decided to use this information to let photographers refocus their pictures while editing them, moving the choice of depth of field further afield.
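
The refocusing trick can be sketched with the textbook "shift-and-add" method for light fields, shown here in one dimension and in pure Python. A view taken from offset u sees a point with disparity d shifted by u*d pixels; shifting every view back by u*alpha and averaging lines up, and so sharpens, exactly the points whose disparity equals alpha, while everything else smears out. This is a simplified illustration of the general idea, not Lytro's actual processing; the scene, offsets and image width are made up.

```python
def make_views(points: list[tuple[int, int]], offsets: list[int],
               width: int) -> list[list[float]]:
    """Render one 1D image per camera offset u. Each point (x, d) lands at
    pixel x + u*d, so points with larger |d| move more between views."""
    views = []
    for u in offsets:
        img = [0.0] * width
        for x, d in points:
            pos = x + u * d
            if 0 <= pos < width:
                img[pos] += 1.0
        views.append(img)
    return views


def refocus(views: list[list[float]], offsets: list[int],
            alpha: float) -> list[float]:
    """Shift-and-add: undo a shift of u*alpha in every view, then average.
    Points whose disparity equals alpha line up and come out sharp."""
    width = len(views[0])
    out = [0.0] * width
    for img, u in zip(views, offsets):
        shift = round(u * alpha)
        for x in range(width):
            src = x + shift
            if 0 <= src < width:
                out[x] += img[src]
    return [v / len(views) for v in out]


offsets = [-2, -1, 0, 1, 2]        # hypothetical sub-aperture positions
points = [(20, 2), (40, -1)]       # (x, disparity): two points at different depths
views = make_views(points, offsets, width=64)
for alpha in (2, 0, -1):
    img = refocus(views, offsets, alpha)
    peak = max(img)
    print(f"focus at disparity {alpha:+d}: peak {peak:.2f} at x={img.index(peak)}")
```

Focusing at disparity +2 brings the first point into perfect alignment (a peak of 1.00 at x=20), focusing at -1 does the same for the second point at x=40, and at 0 neither point is sharp.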

The Lytro, one of the first consumer computational photography cameras, was an intellectual curiosity more than a revolution. It was also hard to know what to do with it or how to market it, and depth-of-field control simply seemed like the most attainable market, I assume. Today, however, with our vision of a fully articulated stereo capture system for VR somewhat stalled, perhaps those many little lenses can come to our rescue. Google seems to think Lytro was onto something, and has jumped into the VR world bringing all its unparalleled computational power with it.

Google Jump, like many other projects following the same logic, extends the ideas behind Lytro, but aims to use consumer cameras and distributed (cloud) computing to bring that level of information extraction from camera data to video.

The basic technologies have been around for years. Photogrammetry is as old as photography itself, even older maybe, but it really took off when computers could crunch the numbers. PhotoScan, a desktop application for 3D reconstruction from stills and video, is a mainstay in the visual effects, 3D printing, surveying, preservation, medical and aerospace industries, and now in virtual reality.

The basic premise is that by capturing a scene with enough cameras, the original state of the world can be computed, reconstructed and played back. People could move around and have the world appear as it was, and in stereo. For these technologies parallax is not the enemy but the most important ingredient. As the distance between cameras increases, so does the information about the scene: obscured objects become visible, and depth is more easily calculated.
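
Why more separation means more information shows up in the standard triangulation formula for a rectified pair of pinhole cameras: depth Z = f*B/d, where f is the focal length in pixels, B the baseline between the cameras and d the disparity in pixels. Differentiating shows that a fixed matching error of about one pixel turns into a depth error of roughly Z²/(f*B), which shrinks as the baseline grows. The focal length and baselines below are hypothetical values.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth (metres) for a rectified stereo pair."""
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_error_px: float = 1.0) -> float:
    """First-order depth uncertainty caused by a disparity error, from
    differentiating Z = f*B/d: |dZ| = Z**2 / (f*B) * |dd|."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


FOCAL_PX = 1000.0  # hypothetical focal length in pixels
for baseline in (0.06, 0.2, 0.5):
    err = depth_error(FOCAL_PX, baseline, depth_m=5.0)
    print(f"baseline {baseline:.2f} m -> depth error at 5 m ~ {err:.3f} m")
```

With these numbers, an eye-width baseline leaves roughly 0.4 m of uncertainty at 5 m, while half a metre between cameras brings it down to about 5 cm.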

It takes an inordinate amount of computing power to accurately decipher even just the depth from flat camera views, and more still to rearrange it in a way that can be played back. And there are problems computers don't understand yet: semi-transparent things like smoke are hard to describe, as are reflective things like mirrors, or, even worse, semi-transparent things that reflect, like windows. Lights reflecting off objects cause trouble, as they do now, because a highlight shifts with the viewpoint instead of staying on the surface, yet to the computer it looks like part of the object. Reflections in general are a pain in any effects work, regardless of the number of cameras used.

It will be many years before this technology is useful, or even attainable, for amateur filmmakers, but as with everything, small breakthroughs lead to bigger ones, the knowledge gets spread around, and many of the challenges in the previous chapters will become far less daunting.

This area of research is not the sole dominion of Google; researchers from all over the world are approaching the problem, like the Lytro camera, from slightly different angles. Google's involvement simply put it on the front page.

With so many great minds devoted to the problem, it is hard not to get excited.

Further Reading

Google Jump technology

Nozon spatial 3D rendering and playback

OTOY light field/holographic rendering and capture

Arnold Stereo rendering examination

Parallax from rendered images

Photogrammetric applications of immersive video cameras

Agisoft 4D Photogrammetry
